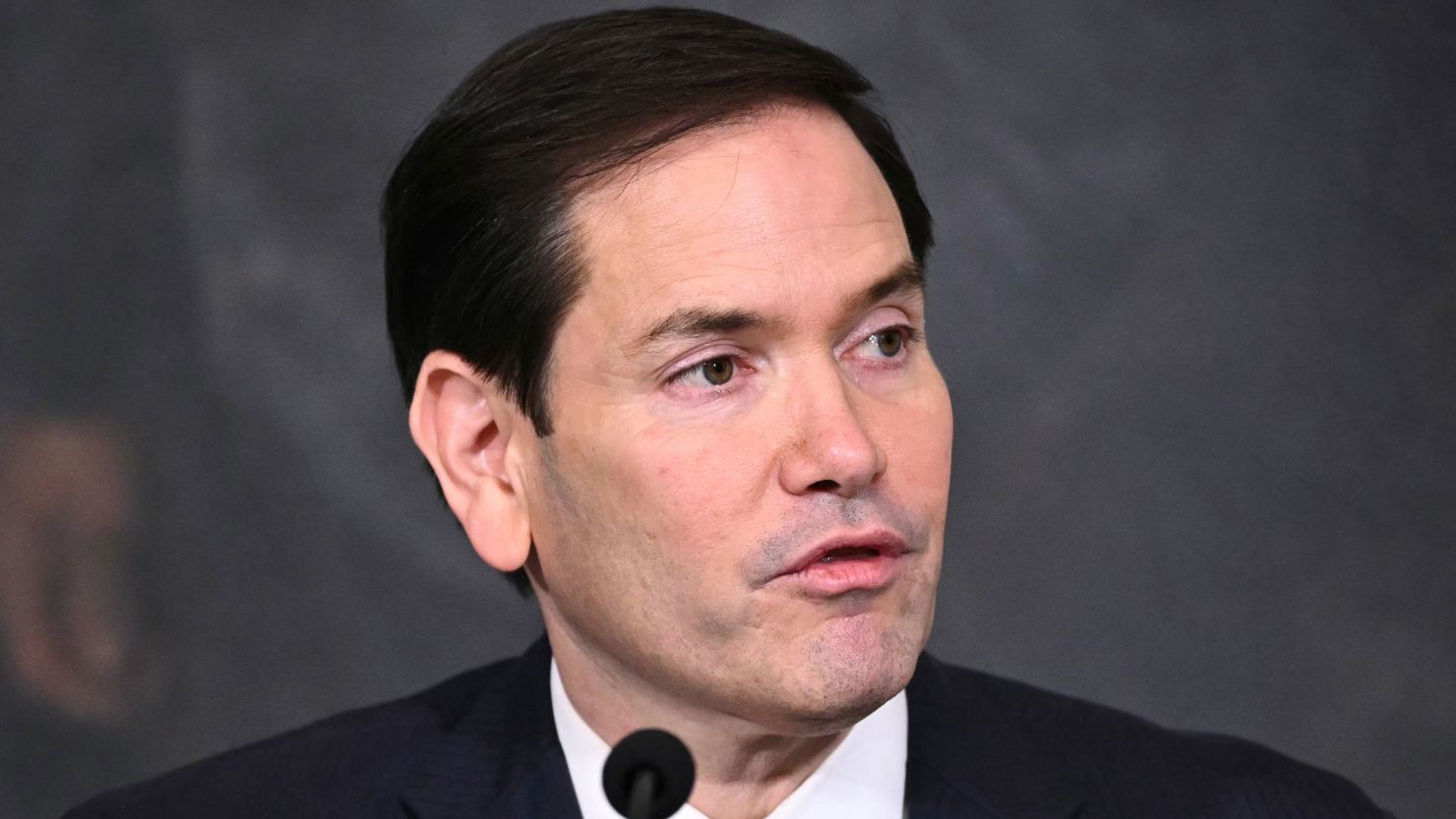

The digital landscape is evolving rapidly, bringing both remarkable advances and significant new threats. Among the most concerning is AI-powered impersonation, commonly carried out with “deepfakes”: synthetic audio and video that are becoming realistic enough that the average person struggles to distinguish genuine content from fabrication. A prime example that sent shockwaves through the political world was the recent Rubio AI Impersonator Scandal, an incident that highlighted the urgent need for heightened vigilance and robust countermeasures.

This particular scandal involved synthetic audio created to mimic Senator Marco Rubio’s voice and spread misleading information. It wasn’t just a technical curiosity; it was a potent demonstration of how artificial intelligence could be weaponized to sow discord, manipulate public opinion, and undermine the very foundations of democratic processes. As we delve into the details of the Rubio AI Impersonator Scandal, we uncover the critical vulnerabilities that modern technology, left unchecked, can expose.

What Exactly Happened in the Rubio AI Impersonator Scandal?

The Anatomy of a Deepfake Deception

The incident at the heart of the Rubio AI Impersonator Scandal unfolded when an AI-generated audio clip, meticulously crafted to sound like Senator Marco Rubio, began circulating. This synthetic audio featured the senator ostensibly making controversial statements or expressing views inconsistent with his known political stances. The objective was clear: to create confusion, erode trust in public figures, and potentially influence voters by presenting a false narrative.

- Sophisticated Voice Cloning: The impersonation wasn’t a simple sound-alike; it was a highly sophisticated example of voice cloning, capable of replicating not just the tone and timbre but also the unique speech patterns and inflections of Senator Rubio.

- Targeted Dissemination: The fabricated content was likely disseminated through various digital channels, including social media platforms, chosen to reach a broad audience before its authenticity could be questioned.

- Immediate Backlash and Exposure: While the initial intent was to deceive, swift action by fact-checkers and digital forensics experts helped expose the fabrication, preventing its wider acceptance and demonstrating the power of collective vigilance against such digital manipulation.

The Technology Behind the Impersonation

The technology enabling such lifelike impersonations has advanced at an astonishing pace. Impersonations like the one in the Rubio AI Impersonator Scandal rely on AI models trained on large datasets of real audio or video recordings. These models learn to synthesize new content that many listeners and viewers cannot readily tell apart from genuine media.

- Generative Adversarial Networks (GANs): Often, deepfakes are created using GANs, where one neural network (the generator) creates synthetic content while another (the discriminator) tries to identify whether the content is real or fake. This adversarial process refines the generator’s output until it’s highly convincing.

- Text-to-Speech and Voice Synthesis: For audio deepfakes like the one in the Rubio AI Impersonator Scandal, advanced text-to-speech (TTS) systems are combined with voice cloning techniques. A short recording of a person’s voice, in some systems only a few seconds long, can be enough to generate new speech in that voice, saying anything the creator desires.

- Accessibility of Tools: Worryingly, the tools and algorithms required to create high-quality deepfakes are becoming more accessible, moving from the realm of specialized labs to more widely available software, lowering the barrier to entry for malicious actors.
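
To make the adversarial process in the GAN bullet above more concrete, here is a minimal sketch of the two competing objectives, written in plain Python. It is an illustration only: the function names (`bce`, `discriminator_loss`, `generator_loss`) are hypothetical, the numbers stand in for a discriminator's "probability this is real" outputs, and the generator loss shown is the commonly used non-saturating variant. Nothing here is claimed to reflect the tooling used in the Rubio incident.

```python
import math


def bce(prob, label):
    """Binary cross-entropy for one predicted probability and a 0/1 label."""
    eps = 1e-12  # clamp to avoid log(0)
    prob = min(max(prob, eps), 1 - eps)
    return -(label * math.log(prob) + (1 - label) * math.log(1 - prob))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored near 1 and fakes near 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """The generator wants its fakes scored near 1 (non-saturating form)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)


# Hypothetical discriminator scores early and late in training.
early_fake_scores = [0.05, 0.10]   # fakes are easy to spot
late_fake_scores = [0.45, 0.55]    # fakes are nearly indistinguishable
real_scores = [0.90, 0.95]

print("generator loss, early:", generator_loss(early_fake_scores))
print("generator loss, late: ", generator_loss(late_fake_scores))
print("discriminator loss, late:", discriminator_loss(real_scores, late_fake_scores))
```

As the generator improves, its loss falls while the discriminator's loss on fakes rises, which is the tug-of-war that pushes synthetic output toward realism.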

The Broader Implications for Democracy and Trust

Eroding Public Trust and Spreading Disinformation

The primary danger posed by incidents like the Rubio AI Impersonator Scandal is the profound erosion of public trust. When people can no longer distinguish between truth and fabrication, the very foundation of informed decision-making crumbles. This creates fertile ground for disinformation campaigns that can destabilize political processes, incite social unrest, and manipulate public perception on critical issues.

Moreover, the existence of deepfakes can lead to a phenomenon known as the “liar’s dividend,” where genuine evidence or legitimate information can be dismissed as “fake” by those who wish to discredit it. This makes it significantly harder for journalists, researchers, and public institutions to effectively communicate facts and build consensus.

Political Manipulation and Election Integrity

The ramifications for political campaigns and election integrity are particularly severe. AI deepfakes can be deployed to:

- Discredit Opponents: Create false narratives about candidates, fabricating embarrassing or controversial statements that never occurred.

- Influence Voter Behavior: Disseminate targeted misinformation to specific demographics, swaying opinions during critical election periods.

- Sow Confusion: Overwhelm the information ecosystem with fake content, making it difficult for voters to access and trust reliable sources before casting their ballots.

The Rubio AI Impersonator Scandal serves as a stark warning of what could become a widespread tactic in future elections, posing an unprecedented challenge to the fairness and integrity of democratic processes worldwide.

Detecting and Combating AI Impersonation

Challenges in Detection

Despite advancements in detection technologies, identifying AI-generated content remains a formidable challenge. The rapid pace of AI development means that detection tools often play catch-up to the latest generation of synthetic media. Minor artifacts, inconsistencies in lighting, or subtle vocal distortions that once betrayed a deepfake are becoming increasingly rare as the technology improves.

Furthermore, the sheer volume of digital content makes manual review impractical. Detection at that scale requires automated solutions, which are still at an early stage of development and not yet reliably accurate.

Emerging Solutions and Countermeasures

Combating AI impersonation requires a multi-pronged approach involving technological innovation, policy frameworks, and public education:

- AI Detection Tools: Researchers are developing AI-powered tools specifically designed to identify synthetic media by looking for the statistical artifacts, or “digital fingerprints,” that generation models tend to leave behind.

- Digital Watermarking & Provenance: Techniques like digital watermarking and content provenance initiatives (e.g., the C2PA standard) aim to create tamper-evident records of media origin, allowing users to verify whether content has been altered or is entirely synthetic.

- Legislation and Regulation: Governments are beginning to explore laws requiring disclosure for AI-generated content, imposing penalties for malicious deepfake creation, and safeguarding against election interference using synthetic media.

- Media Literacy Education: Empowering the public with critical thinking skills and media literacy is crucial. Educating individuals on how to identify suspicious content, verify sources, and be skeptical of sensational claims is a vital defense mechanism.
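
The provenance idea in the list above can be sketched in a few lines of Python. This is a simplified illustration, not the C2PA format or API: real provenance systems use certificate-based signatures and structured manifests, whereas this sketch assumes a shared secret key and uses an HMAC as a stand-in. The names `make_manifest` and `verify_manifest`, the key, and the sample bytes are all hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(media: bytes, creator: str, key: bytes) -> dict:
    """Bind a creator claim to the media's SHA-256 hash and sign the claim."""
    claim = {"creator": creator, "sha256": hashlib.sha256(media).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(media: bytes, manifest: dict, key: bytes) -> bool:
    """Return True only if the claim is authentic and matches the media."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself was altered or signed with another key
    actual_hash = hashlib.sha256(media).hexdigest()
    return hmac.compare_digest(actual_hash, manifest["claim"]["sha256"])


# Hypothetical usage: sign an original clip, then check an altered copy.
key = b"demo-signing-key"              # assumption: a shared secret for the demo
original = b"original audio bytes"     # placeholder for real media content
manifest = make_manifest(original, "Example Newsroom", key)

print(verify_manifest(original, manifest, key))
print(verify_manifest(b"edited audio bytes", manifest, key))
```

The record does not prevent tampering; it makes tampering detectable, because any change to the media or to the claim causes verification to fail.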

The Future Landscape: Safeguarding Against AI Deception

The Role of Tech Companies and Governments

Major tech platforms bear a significant responsibility in mitigating the spread of AI deepfakes. This includes investing in robust detection systems, implementing clear policies against malicious synthetic media, and cooperating with law enforcement and research communities. Governments, in turn, must establish clear legal frameworks that address the creation and dissemination of deceptive AI content, especially in the context of elections and national security.

The collaborative effort between private industry, government bodies, and civil society is paramount to building a resilient digital ecosystem where incidents like the Rubio AI Impersonator Scandal can be swiftly identified and contained.

Individual Responsibility and Critical Thinking

Ultimately, individual vigilance forms the frontline defense against AI deception. In an age where digital manipulation is increasingly sophisticated, it is incumbent upon every internet user to cultivate a critical mindset. Before sharing, believing, or acting upon information, especially content that seems sensational or plays into strong emotions, always ask:

- Who created this content?

- What is the source? Is it reputable?

- Does this align with other verifiable information?

- Could this be an AI-generated impersonation?

Being an informed and discerning consumer of digital media is no longer just a good practice; it is an essential civic duty in the face of evolving threats like the Rubio AI Impersonator Scandal.

Lessons Learned from the Rubio AI Impersonator Scandal

The Rubio AI Impersonator Scandal served as a sobering wake-up call, demonstrating the profound and immediate threat that AI-powered disinformation poses to our society. It highlighted that while artificial intelligence offers immense potential for good, its misuse can inflict significant damage on trust, democracy, and individual reputations. The incident underscored the urgent need for a multi-faceted approach involving advanced technological solutions, proactive legislative measures, and widespread public education.

As AI technology continues to advance, so too must our defenses. The battle against synthetic media is ongoing, and incidents like the one involving Senator Rubio remind us that constant vigilance, critical thinking, and a collaborative effort are the only ways to safeguard our information ecosystem from the growing tide of digital deception.