The U.S. government has issued an advisory warning of escalating attempts to deploy sophisticated **AI Rubio deepfake** content. The warning highlights a growing threat: artificial intelligence used to create highly realistic but entirely fabricated audio and video, designed to manipulate public perception and sow discord. The focus on a prominent political figure like Marco Rubio, the U.S. Secretary of State and former senator, underscores the potential for AI-generated synthetic media to affect national security, election integrity, and public trust. Understanding this danger matters for anyone navigating today’s information environment.

The Urgent Government Advisory on AI Deepfakes

The recent alert from U.S. intelligence agencies and cybersecurity experts marks a critical moment in the fight against digital disinformation. This specific warning about **AI Rubio deepfake** attempts indicates a targeted effort to exploit advanced artificial intelligence for malicious purposes. The government’s concern stems from the potential for these hyper-realistic fabrications to be used to influence political discourse, undermine public figures, and ultimately erode faith in authentic information sources.

Such advisories are not issued lightly; they reflect a growing awareness of the capabilities of deepfake technology, which has moved beyond novelty and into the realm of serious national security threats. The warning serves as a call to action for media organizations, technology platforms, and the public to be more vigilant and discerning about the content they encounter online.

Understanding Deepfake Technology: What Are We Up Against?

Deepfakes are a type of synthetic media in which a person’s likeness or voice is fabricated, or swapped into an existing image, video, or audio recording. They are produced with artificial intelligence techniques, primarily deep learning, in which models are trained on recordings of a person’s voice, facial expressions, and mannerisms. The results can be highly convincing:

- Visual Manipulation: Faces can be seamlessly swapped, expressions altered, and body language mimicked to create a video that appears authentic.

- Audio Manipulation: Voices can be cloned with remarkable accuracy, allowing malicious actors to generate speeches, phone calls, or statements that sound exactly like the target individual.

- Sophistication: Modern deepfakes are becoming increasingly difficult to detect by eye or ear alone, often requiring specialized analytical tools.

The ease of access to such powerful AI tools, combined with the global reach of the internet, makes deepfakes a potent weapon for those seeking to spread misinformation or destabilize political systems.

Why Target a Figure Like Rubio with AI Deepfakes?

The specific mention of **AI Rubio deepfake** attempts raises questions about the motivations behind such targeted attacks. Prominent political figures, especially those in leadership positions or involved in critical policy debates, are prime targets for disinformation campaigns. The reasons typically include:

- Undermining Credibility: Fabricated content can be used to discredit a politician, damage their reputation, or portray them in a false light.

- Sowing Discord: A fake statement or action attributed to a political leader can ignite public outrage, exacerbate existing divisions, or spark civil unrest.

- Influencing Elections: In the lead-up to elections, deepfakes can be deployed to sway public opinion, suppress voter turnout, or create confusion around key issues.

- Testing Capabilities: Malicious actors might also use high-profile individuals as targets to test the effectiveness of their deepfake technology and distribution networks.

While Rubio is named, the broader implication is that any public figure, from politicians to business leaders and journalists, could become the subject of such digitally manipulated content.

The Far-Reaching Impacts and Risks

The rise of advanced AI deepfakes, exemplified by the **AI Rubio deepfake** warning, carries severe implications that extend beyond individual reputational damage. These digital threats pose significant risks to core societal functions.

Threats to Election Integrity and Democracy

The most immediate and concerning threat posed by AI deepfakes is their potential to undermine democratic processes. During election cycles, the rapid spread of fabricated videos or audio can:

- Manipulate Voters: Voters might be swayed by false statements or actions attributed to candidates, altering their perceptions and voting decisions.

- Sow Distrust: The proliferation of deepfakes can erode public trust in all media, making it difficult for citizens to distinguish truth from falsehood, thereby creating widespread cynicism about political discourse.

- Disrupt Campaigns: Targeted campaigns could be forced to divert resources to debunk fabricated content, distracting from their core messages.

- Suppress Participation: Confusion and frustration generated by deepfakes could lead to voter apathy or discourage participation in the democratic process.

This environment of pervasive distrust makes it harder for a functioning democracy to thrive.

Implications for National Security

Beyond elections, the strategic deployment of deepfakes presents a clear national security risk. State and non-state actors could leverage these tools to:

- Create International Incidents: A fabricated video of a world leader making inflammatory remarks could escalate diplomatic tensions or even incite conflict.

- Propaganda and Psychological Warfare: Deepfakes are powerful tools for spreading propaganda, disrupting social cohesion, and engaging in psychological operations against adversary nations.

- Intelligence Operations: They can be used to impersonate officials to gain access to sensitive information or influence intelligence gathering.

- Economic Sabotage: Fabricated announcements from business leaders or government officials could trigger stock market crashes or damage economic stability.

The ability to credibly impersonate key figures represents a new frontier in cyber warfare and intelligence operations.

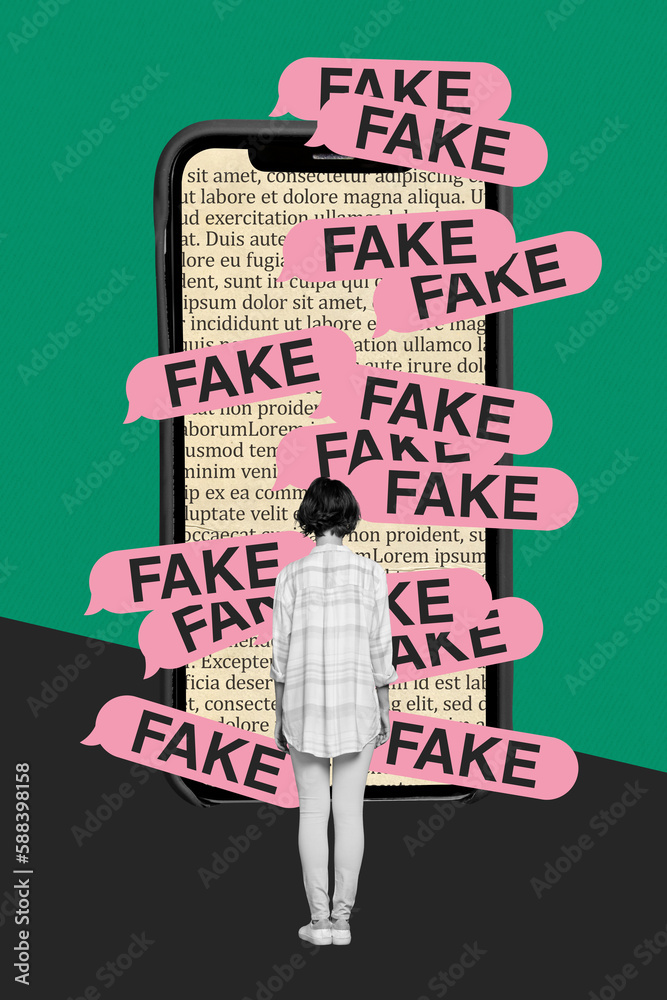

How to Identify AI Rubio Deepfakes and Other Synthetic Media

While deepfake technology is rapidly advancing, there are still tell-tale signs that can help discerning viewers identify fabricated content. Public awareness and critical media literacy are our first lines of defense against pervasive misinformation, including potential **AI Rubio deepfake** attempts.

When encountering suspicious content, consider these red flags and verification steps:

- Unnatural Movements or Expressions: Look for awkward or jerky movements, inconsistent facial expressions, or a lack of natural blinking. Often, the eyes can appear unusually still.

- Audio Sync Issues: The audio may not perfectly synchronize with the mouth movements, or the voice might sound unnatural, robotic, or have an odd cadence.

- Inconsistent Lighting or Shadows: Pay attention to the lighting on the person’s face compared to the background. Deepfakes can sometimes have unnatural shadows or highlights.

- Pixelation or Blurring: While high-quality deepfakes are smooth, lower-quality ones might show subtle blurring around the edges of the manipulated person, or inconsistencies in resolution.

- Bizarre Backgrounds: Sometimes, the background might contain anomalies or distortions that betray the digital manipulation.

- Source Verification: Always question the source of the information. Is it from a reputable news organization? Has the content been shared by official channels?

- Check Multiple Sources: If something seems too shocking or unbelievable, verify the information with multiple, diverse, and trusted news sources before sharing.

Technologies are also emerging to help detect deepfakes, but human vigilance remains paramount.
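As one illustration, the blinking cue from the list above can be turned into a rough automated check. The sketch below is a minimal example, not a production detector: it assumes some separate face-tracking tool (not shown) has already produced a per-frame "eye openness" score between 0 and 1, and the names `count_blinks` and `blink_rate_is_suspicious`, along with both thresholds, are hypothetical values chosen for illustration.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count open-to-closed transitions in a per-frame eye-openness series.

    `eye_openness` is assumed to come from an external face-tracking tool;
    `closed_below` is an illustrative threshold, not a calibrated value.
    """
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        # A blink starts on the first frame where the eye becomes closed.
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks


def blink_rate_is_suspicious(
    eye_openness: Sequence[float],
    fps: float,
    min_blinks_per_minute: float = 5.0,
    closed_below: float = 0.2,
) -> bool:
    """Return True when the clip shows fewer blinks per minute than expected.

    `min_blinks_per_minute` is an assumed cutoff for illustration only.
    """
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(eye_openness) / fps / 60.0
    rate = count_blinks(eye_openness, closed_below) / minutes
    return rate < min_blinks_per_minute
```

A low blink rate is only a hint. Short clips, compression, and newer generation tools can all defeat this check, so a result like this should prompt closer verification rather than settle the question.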

Government Response and Public Awareness Initiatives

The U.S. government, alongside global partners, is actively responding to the threat of deepfakes. Beyond issuing specific warnings like the one concerning **AI Rubio deepfake** attempts, efforts are underway on multiple fronts:

- Legislation and Policy: Lawmakers are exploring new laws to combat the creation and dissemination of malicious deepfakes, potentially criminalizing their use for deceptive purposes.

- Technological Development: There’s significant investment in developing advanced deepfake detection tools, including AI-powered forensics that can identify subtle digital artifacts.

- Industry Collaboration: Governments are working with tech giants and social media platforms to implement better content moderation policies, flagging systems, and rapid response mechanisms.

- Public Education: Campaigns are being launched to enhance media literacy, teaching citizens how to identify and critically evaluate digital content.

These combined efforts aim to build a more resilient information ecosystem and equip the public with the tools needed to navigate an increasingly complex online world.

Protecting Yourself Online in the Age of AI Deepfakes

As the sophistication of digital threats like the **AI Rubio deepfake** attempts grows, individual responsibility in consuming and sharing information becomes more critical than ever. Here are actionable steps you can take to protect yourself and contribute to a healthier online environment:

- Practice Skepticism: Approach all online content, especially sensational or emotionally charged material, with a healthy dose of skepticism.

- Verify Before You Share: Never share content without verifying its authenticity, especially if it comes from an unknown or unverified source.

- Utilize Fact-Checking Sites: Familiarize yourself with reputable fact-checking organizations and tools that can help debunk false claims and deepfakes.

- Stay Informed: Keep abreast of the latest news and advisories from official government sources and cybersecurity experts regarding emerging digital threats.

- Report Suspicious Content: Many social media platforms have reporting mechanisms for misinformation or manipulated media. Use them.

- Enhance Your Digital Literacy: Continuously educate yourself on how digital media can be manipulated and how to critically analyze online information.

By adopting these habits, individuals become active participants in combating disinformation rather than passive recipients.
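Part of the "Verify Before You Share" habit can be automated. The following is a minimal sketch, assuming you keep your own list of domains you consider official or reputable. The function name `is_from_trusted_domain` and the example domain list are hypothetical, and a matching domain says nothing about whether a specific clip is authentic.

```python
from typing import Iterable
from urllib.parse import urlsplit


def is_from_trusted_domain(url: str, trusted_domains: Iterable[str]) -> bool:
    """Check whether a link's hostname is a trusted domain or one of its subdomains.

    `trusted_domains` is a reader-maintained list (an assumption of this sketch).
    """
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    if not host:
        return False
    for domain in trusted_domains:
        domain = domain.lower()
        # Match the domain itself or a true subdomain, so that a lookalike
        # such as "state.gov.example.com" is not accepted.
        if host == domain or host.endswith("." + domain):
            return True
    return False


# Illustrative list only; choose the sources you actually trust.
trusted = {"state.gov"}
print(is_from_trusted_domain("https://www.state.gov/briefing", trusted))
print(is_from_trusted_domain("https://state.gov.example.com/briefing", trusted))
```

Matching on the full hostname rather than searching the URL text is the key design choice here, since lookalike addresses often embed a trusted name somewhere in the link.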

Conclusion: Vigilance is Key in the Face of AI Deepfakes

The U.S. government’s explicit warning about **AI Rubio deepfake** attempts is a stark reminder of the severe and evolving threats posed by advanced artificial intelligence. Deepfake technology has moved from a futuristic concept to a present danger, capable of eroding trust, manipulating public opinion, and undermining national security.

As AI continues to advance, so too will the sophistication of malicious synthetic media. The collective effort of governments, technology companies, and individual citizens is paramount. By fostering critical thinking, promoting media literacy, and supporting robust detection and mitigation strategies, we can build a more resilient defense against the pervasive spread of AI-generated disinformation. Staying informed and exercising caution are not just recommendations; they are essential responsibilities in safeguarding our information environment and the integrity of our democratic processes.